Example 2: laser effect

------------------------------- Distorting UVs -------------------------------

To start with, quick digression for beginners:

We’ve seen before how to remap some texture coordinates using another texture.

If you want to animate a texture, the setup is very similar. Instead of plugging your distortion texture directly into the output texture, you add it to your texture coordinates beforehand.

What does that mean? Adding a value to texture coordinates means you are shifting the pixels around. UVs are normalized, so 1 is the full width (or height) of your texture.

Take a horizontal line.

Add 0 to it, it stays in place.

Add 0.5 to it, it moves to the top edge.

Add 1 to it, it appears to have stayed in place but really, it's been all around and back to its original position.
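If you'd rather see the numbers, here is a tiny Python sketch of the horizontal line example. It is not material editor code. It assumes the texture tiles (wrap addressing), that the line starts at V = 0.5, and that V = 0 is the top edge. The function name `apparent_line_v` is made up for the illustration.

```python
def apparent_line_v(line_v, offset):
    """Where a horizontal line drawn at line_v in the texture shows up on
    screen after `offset` is added to the V coordinate.

    A screen pixel at v looks up the texture at (v + offset), so the line
    appears where v + offset == line_v, wrapped into [0, 1).
    """
    return (line_v - offset) % 1.0


line_v = 0.5  # assumption: the line sits in the middle of the texture

for offset in (0.0, 0.5, 1.0):
    print(offset, apparent_line_v(line_v, offset))
```

With these assumptions an offset of 0 leaves the line at 0.5, an offset of 0.5 puts it at 0 (the edge), and an offset of 1 brings it back to 0.5 after a full trip around the texture.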

In the next example, I use a gradient from black to a 0.5 grey:

Reminders:

- Don't forget that the distortion texture should be linear (= not sRGB) if you're after a mathematically correct result. (I first made the mistake here and wondered why the result wasn't as expected.)

- You don't have to use black and white textures; you can use two channels to shift the U and V independently. Just use a component mask because UV info requires 2 channels while the RGB of a map is 3.
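To put numbers on both reminders, here is a small Python sketch. `srgb_to_linear` is the standard sRGB decode curve, which is roughly what the sampler applies when a texture is flagged as sRGB. `component_mask_rg` is just a hypothetical stand-in for a component mask node.

```python
def srgb_to_linear(c):
    """Standard sRGB decode for a single channel value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def component_mask_rg(rgb):
    """Keep only R and G, so a 3-channel colour becomes a 2-channel UV offset."""
    return (rgb[0], rgb[1])


stored_grey = 0.5
# If the texture is treated as sRGB, the shader sees roughly 0.21 instead of
# the 0.5 that was painted, so the shift is much smaller than intended.
print(srgb_to_linear(stored_grey))

# Two channels used to shift U and V independently; blue is ignored.
print(component_mask_rg((0.25, 0.5, 0.0)))
```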

So. This is what you get from plugging the distortion texture directly into the output texture:

This is what you get from adding the distortion texture to texture coordinates before plugging it in the output texture:

I've been through this quite quickly since we've kinda covered it before and most of you probably know this inside out. Don't hesitate to ask if you have questions.
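For those who prefer code to node graphs, the distortion setup from this digression could be sketched like this in Python. Everything here is an assumption made for the illustration: the tiny 3x4 textures, the nearest-neighbour `sample` helper and the wrap addressing. A real material does the same thing per pixel on the GPU.

```python
def sample(texture, u, v):
    """Nearest-neighbour lookup with wrap addressing.
    `texture` is a list of rows; (u, v) are normalized coordinates."""
    height = len(texture)
    width = len(texture[0])
    x = int((u % 1.0) * width) % width
    y = int((v % 1.0) * height) % height
    return texture[y][x]


# Output texture: a horizontal white line on the third row.
base = [
    [0, 0, 0],
    [0, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
]

# Distortion texture: horizontal gradient from black to a 0.5 grey (linear values).
distortion = [[0.0, 0.25, 0.5] for _ in range(4)]


def render(width=3, height=4):
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (x + 0.5) / width   # pixel centre
            v = (y + 0.5) / height
            d = sample(distortion, u, v)
            # Add the distortion to the coordinates BEFORE the lookup.
            row.append(sample(base, u, v + d))
        rows.append(row)
    return rows


for row in render():
    print(row)
```

Where the gradient is black the line stays put, and the brighter the gradient gets, the further the line is shifted, so the straight line comes out slanted.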

------------------------------- End of the digression -------------------------------

Now to our example.

Months ago I started talking about mapping a texture using the world space rather than the UV space.

Here's a second example.

Say you've got a moving object which projects a laser that reveals the smoke in the air. We want our laser material to fake the fact it is lighting some smoke.

Now that I think about it, it might sound a bit previous gen, what with lit particles in every engine nowadays. Still I'll stick to this example because there can be more than one use to a principle. (and because I already spent a few hours on this!)

Whichever way the object moves, the smoke should remain static. You do not want the smoke material to be mapped to the UVs of your geometry or sprite. It should be mapped to the world where it supposedly belongs.
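Here is a rough Python sketch of why world space mapping keeps the smoke in place. The names, the `TILING` value and the choice of projecting along Z (using world X and Y as coordinates) are all assumptions for the illustration. Coordinates relative to the object stand in for mesh UVs, since both travel with the object.

```python
TILING = 0.01  # hypothetical: one texture repeat every 100 world units


def object_space_coords(world_point, object_pos):
    """Coordinates that travel with the object, like mesh UVs do."""
    return (
        (world_point[0] - object_pos[0]) * TILING,
        (world_point[1] - object_pos[1]) * TILING,
    )


def world_space_coords(world_point, object_pos):
    """Coordinates taken from the world position; the object's position
    is deliberately ignored."""
    return (world_point[0] * TILING, world_point[1] * TILING)


smoke_point = (250.0, 40.0, 0.0)  # a fixed spot in the air
before = (100.0, 0.0, 0.0)        # object position at one moment
after = (130.0, 0.0, 0.0)         # object position a bit later

# Object space: the lookup at that spot changes, so the smoke would slide along.
print(object_space_coords(smoke_point, before), object_space_coords(smoke_point, after))

# World space: same lookup both times, so the smoke stays where it is.
print(world_space_coords(smoke_point, before), world_space_coords(smoke_point, after))
```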

The moving object in my example is actually… fictional. Yes, you have to imagine it, all I've done is the ‘laser’. Which is not much of a laser in the end. I didn’t want to spend too much time polishing something which is not going to be used anywhere so I essentially stuck to the showcase of what this post is about: mapping textures to world space coordinates. I’ll keep the frills and glamour for some other day. (maybe)

Now, what do we have here?

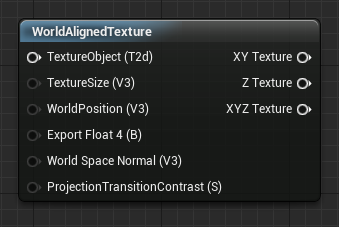

A normal map is used twice (normal 1 and normal 2 boxes), mapped to world coordinates with different scales and panning at different speeds. They are brought together to be used as a noise that will distort the UVs of another texture.

Usually you would add the result to a texture coordinates node. Since we are mapping our textures to the world, we’re using the world position setup instead, but the idea is exactly the same.

That goes into two different textures that get added together (all of that to add a bit of complexity so the patterns don't repeat too obviously) and into our output texture at last.
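Written as code, the whole graph might look roughly like the Python sketch below. It is only an approximation of the node network. The procedural functions stand in for the real normal map and smoke textures, and every constant (scales, pan speeds, distortion strength), the XY projection and the way the two noise layers are averaged are assumptions of mine, not values read from the material.

```python
import math

# Hypothetical settings for the two uses of the same normal map.
SCALE_1, PAN_1 = 0.010, (0.05, 0.00)
SCALE_2, PAN_2 = 0.023, (0.00, -0.03)
SMOKE_SCALE = 0.005
STRENGTH = 0.1


def normal_rg(u, v):
    """Stand-in for the R and G channels of the normal map, in [0, 1]."""
    return (0.5 + 0.5 * math.sin(2 * math.pi * u),
            0.5 + 0.5 * math.cos(2 * math.pi * v))


def smoke_a(u, v):
    """Stand-in for the first smoke texture, in [0, 1]."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * u) * math.sin(2 * math.pi * v)


def smoke_b(u, v):
    """Stand-in for the second smoke texture, in [0, 1]."""
    return 0.5 + 0.5 * math.cos(2 * math.pi * (u + v))


def laser_smoke(world_pos, time):
    x, y = world_pos[0], world_pos[1]  # assumption: project along Z

    # Same map sampled twice, with different scales and panning speeds.
    n1 = normal_rg(x * SCALE_1 + time * PAN_1[0], y * SCALE_1 + time * PAN_1[1])
    n2 = normal_rg(x * SCALE_2 + time * PAN_2[0], y * SCALE_2 + time * PAN_2[1])

    # Bring the two together as noise (averaged here; an assumption).
    noise_u = (n1[0] + n2[0]) * 0.5
    noise_v = (n1[1] + n2[1]) * 0.5

    # Add the noise to the world-position coordinates instead of to a
    # texture coordinates node.
    u = x * SMOKE_SCALE + noise_u * STRENGTH
    v = y * SMOKE_SCALE + noise_v * STRENGTH

    # Two different textures added together to break up the repetition.
    return smoke_a(u, v) + smoke_b(u, v)


print(laser_smoke((250.0, 40.0, 0.0), time=0.0))
print(laser_smoke((250.0, 40.0, 0.0), time=1.0))
```

Note that `laser_smoke` never looks at the object's position or its UVs, only at the world position of the pixel and the time, which is the whole point of the setup.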

Finally, there you go:

The object moves in space and through the smoke.